There’s a variety of strategies for backing up your data, and no single solution is perfect for everyone. Some people use commercial services like Jungle Disk, Mozy, or Carbonite. Others choose services such as Amazon’s Simple Storage Service (S3) together with tools such as Duplicity. Whatever your preference, the ultimate goal is to protect your data against loss, theft, and natural disasters. In this post, we’ll cover how you can implement encrypted offsite backups using Duply, Duplicity, SSHFS, and Rsync.

Background

I’ve explored a variety of remote backup strategies and haven’t found one that’s a good fit for me, mostly because I have around 250-300GB of data to back up, and the monthly bill for that amount of data is more than I’m willing to spend. In general, I prefer the 3-2-1 strategy: keep 3 copies of your data, in 2 different places, with 1 of them offsite.

When working on the solution, I had a few requirements including:

- All data must be encrypted in transit and at rest

- Must support full and incremental backups

- Must be cost-effective and not result in recurring monthly bills

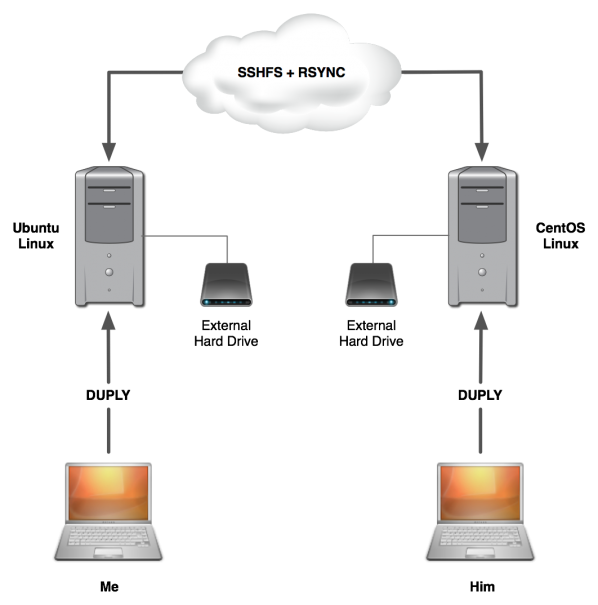

So what was the solution? A colleague and I set up remote backups to each other’s Linux servers using a few basic tools and two external hard drives. It takes a little more elbow grease than commercial solutions, but once it’s up and running it works pretty well and doesn’t cost an arm and a leg.

Backup Architecture

Technically, you could use whatever operating system you wish. I used Ubuntu and he used CentOS because that’s what we were familiar with. As long as it supports the tools, it should work just fine.

- Operating System: Linux-based OS (e.g., Ubuntu, CentOS, etc.)

- Packages: openssh, gpg, duply, duplicity, sshfs, and rsync

- Hardware: 2TB Western Digital External Hard Drive

How it works

First, the client uses duply to back up data to a server on the local network. Duplicity compresses, signs, and encrypts the backup archives with GPG on the client, sends them over SSH so they are encrypted in transit, and they stay encrypted at rest on the local server. The initial backup can take some time depending on how much data you have, but incremental backups are much quicker.

Then I have a nightly cron job that executes a script that copies the data to my friend’s remote server. First, it uses SSHFS to create a mount point for the file system on my friend’s server, then it rsyncs the data over SSHFS, and finally unmounts the directory when complete. For additional security, we’ve implemented the OpenSSH ChrootDirectory configuration option to limit access.
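The nightly job described above boils down to three operations: mount over SSHFS, rsync, and unmount. Below is a rough, hypothetical sketch of that flow, not the Fuse Sync script itself. The remote host, the paths, and the DRY_RUN switch are illustrative assumptions, and by default the sketch only prints the commands it would run.

```bash
#!/usr/bin/env bash
# Hypothetical outline of the nightly offsite sync: mount, rsync, unmount.
# REMOTE, MOUNT_POINT and LOCAL_BACKUPS are placeholders, not Fuse Sync settings.
REMOTE="${REMOTE:-your_user@friend.example.com:/backups}"
MOUNT_POINT="${MOUNT_POINT:-/mnt/offsite}"
LOCAL_BACKUPS="${LOCAL_BACKUPS:-/data/backups/}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print commands; set to 0 to run them

run() {
  # Echo the command in dry-run mode, otherwise execute it.
  if [[ "$DRY_RUN" == 1 ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

sync_offsite() {
  local status=0
  run mkdir -p "$MOUNT_POINT"
  run sshfs "$REMOTE" "$MOUNT_POINT" || return 1
  # -r recurse, -t keep modification times; -a is avoided because
  # owner/group changes may fail on an SSHFS mount.
  run rsync -rt "$LOCAL_BACKUPS" "$MOUNT_POINT/" || status=$?
  # Unmount even if rsync failed, then report rsync's exit status.
  run fusermount -u "$MOUNT_POINT"
  return "$status"
}
```

Unmounting after rsync even when rsync fails keeps a stale SSHFS mount from tripping up the next night's run. Calling DRY_RUN=0 sync_offsite would perform the real transfer.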

We originally tried to back up without the local server, but found that pushing 250GB to a remote server over our internet connections was slow and often meant pausing or canceling the transfer halfway through. Worse, canceling the transfer sometimes corrupted the backup. Having the local server made things quicker and less prone to corruption.

The Guide

Here’s the basic set of steps to get this solution up and running for you. It assumes the use of Ubuntu, so you will need to tweak it according to your environment.

Step 1

Install your server operating system and configure it to automatically mount your external hard drive, which will store the local backups. If the server already has enough disk space, you don’t need an external hard drive at all.

- Partition your external hard drive as needed

- Format it with ext4

- Configure /etc/fstab to automatically mount it as /data/
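For the fstab step, mounting by UUID is usually safer than using a device name like /dev/sdb1, which can change when USB drives are plugged in a different order. The hypothetical helper below prints a suitable entry for the ext4 partition from the list above; the nofail option is an added suggestion so the server still boots if the drive is unplugged.

```bash
# Hypothetical helper: print an /etc/fstab entry that mounts an ext4
# partition by UUID (find it with: sudo blkid /dev/sdX1) at /data.
fstab_entry() {
  local uuid="$1" mount_point="${2:-/data}"
  # nofail lets the system finish booting if the external drive is missing;
  # the trailing "0 2" disables dump and checks the disk after the root fs.
  printf 'UUID=%s\t%s\text4\tdefaults,nofail\t0\t2\n' "$uuid" "$mount_point"
}
```

For example, fstab_entry your-uuid | sudo tee -a /etc/fstab appends the line, and sudo mount -a mounts it without a reboot.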

Step 2

Now let’s install and configure OpenSSH on the server.

sudo apt-get install openssh-server

In /etc/ssh/sshd_config:

Configure OpenSSH to use its internal SFTP subsystem, replacing the existing Subsystem sftp line.

Subsystem sftp internal-sftp

Then add the chroot() matching rule for users in the “sftponly” group at the end of the file, since a Match block applies to every line that follows it.

Match group sftponly
    ChrootDirectory /data/chroot/%u
    X11Forwarding no
    AllowTcpForwarding no
    ForceCommand internal-sftp

We created a specific directory for our user so it was obvious. Keep in mind that the directory in which to chroot() must be owned by root.

# groupadd sftponly
# mkdir -p /data/chroot/your_user
# chown root:root /data/chroot/your_user
# usermod -d / your_user
# adduser your_user sftponly

Restart the SSH daemon so the changes take effect, then test that it all works.

sudo service ssh restart

sftp your_user@host

You should be able to log in and access only the chroot directory, not any file system locations outside it.

A gotcha we ran into is that the user’s chroot directory must be owned by root and must not be writable by the user. So you need to create a directory inside the chroot directory to store the backups.

# mkdir /data/chroot/your_user/backups

Run this as root on the server; the user can’t create it over SFTP, because the chroot directory itself isn’t writable by them. Then make the new directory writable by your user.

# chown your_user:your_user /data/chroot/your_user/backups
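Because sshd refuses to chroot into a directory that breaks these ownership rules, a quick check before testing with sftp can save some head-scratching. The sshd_config manual requires every component of the ChrootDirectory path to be owned by root and not writable by any other user or group; the hypothetical function below walks the path and checks that (GNU stat and readlink assumed).

```bash
# Hypothetical pre-flight check for sshd's ChrootDirectory rule: every
# component of the path must be root-owned and not group/other-writable.
check_chroot_path() {
  local path owner mode
  path=$(readlink -f -- "$1") || return 1   # absolute path, symlinks resolved
  while :; do
    owner=$(stat -c '%u' -- "$path") || return 1   # numeric owner UID
    mode=$(stat -c '%a' -- "$path") || return 1    # octal permission bits
    if [[ "$owner" != 0 ]]; then
      echo "not owned by root: $path" >&2
      return 1
    fi
    # 022 covers the group-write and other-write bits.
    if (( 8#$mode & 8#022 )); then
      echo "writable by group or others: $path" >&2
      return 1
    fi
    [[ "$path" == / ]] && return 0
    path=$(dirname -- "$path")
  done
}
```

For example, check_chroot_path /data/chroot/your_user should succeed. Running it on the writable backups directory is expected to fail, which is fine because that directory is not the chroot itself.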

Step 3

Install and configure the Fuse Sync script on the server. The script mounts the remote file system using SSHFS and then syncs the files to it using rsync. Its configuration file includes comments for each configuration parameter, most of which should be self-explanatory. The major features of this script include:

- Support for custom SSH configuration files specific to your remote host connection

- Sends automatic emails with the results of the script

- Records backup logs

- Automatically mounts and unmounts SSHFS remote file systems

- Detects if the backup is already running and exits accordingly
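Detecting an already-running backup is a common pattern. One way to get it, assuming util-linux's flock is installed, is sketched below. This is not necessarily how Fuse Sync does it, and LOCK_FILE is a placeholder path.

```bash
# Hypothetical "exit if already running" guard using flock(1) from util-linux.
LOCK_FILE="${LOCK_FILE:-/tmp/sync-fuse.lock}"

with_lock() {
  # Take a non-blocking exclusive lock on fd 9 inside a subshell; the lock
  # is released automatically when the subshell exits and fd 9 closes.
  (
    if ! flock -n 9; then
      echo "backup already running; exiting" >&2
      exit 1
    fi
    "$@"
  ) 9>"$LOCK_FILE"
}
```

For example, with_lock your_sync_command skips the run if a previous invocation still holds the lock. Because the lock belongs to an open file descriptor, it disappears even if the job crashes, so there is no stale PID file to clean up.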

Step 4

Install the necessary tools on the server including rsync and sshfs.

sudo apt-get install rsync fuse-utils sshfs
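Before scheduling anything, it can help to confirm that these tools actually ended up on the PATH. A minimal, hypothetical check follows; fusermount, which unmounts FUSE file systems such as SSHFS mounts, is assumed to come from the FUSE package.

```bash
# Hypothetical helper: report any command that is not on the PATH.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, require_cmds rsync sshfs fusermount || exit 1 near the top of a sync script stops it early with a clear message instead of failing halfway through.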

Step 5

Install the necessary tools on the client, including duply, gpg, and duplicity. The installation process varies by operating system; on an Ubuntu system you can use the following:

sudo apt-get install duplicity duply rsync gpg

Mac users may wish to consider using MacPorts and Windows users may want to give Cygwin a shot.

Step 6

Since backups are encrypted/signed, you will need to generate a GPG key on the client.

gpg --gen-key

Note the key ID and passphrase; you’ll need both for the duply configuration.
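If you lose track of the key ID, gpg --list-keys shows it again. For scripting, GnuPG’s --with-colons listing is easier to parse; the hypothetical helper below pulls the key ID of each primary key out of it, relying on the field positions of GnuPG’s colon-listing format.

```bash
# Hypothetical helper: read "gpg --list-keys --with-colons" output on stdin
# and print the key ID of each primary public key.
key_ids() {
  # Field 1 is the record type ("pub" = primary key); field 5 is the key ID.
  awk -F: '$1 == "pub" { print $5 }'
}
```

For example: gpg --list-keys --with-colons | key_ids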

Step 7

Next, create a duply profile on the client and set up the basic configuration parameters.

duply offsite create

This will create the following files:

- ~/.duply/offsite/conf

- ~/.duply/offsite/exclude

The conf file is the configuration file and the exclude file is for specifying files you want to exclude from being backed up.

In the ~/.duply/offsite/conf file, configure the settings according to your environment. Here’s a sample:

# The GPG key ID you just created
GPG_KEY='your GPG Key ID'
# The GPG passphrase for this key
GPG_PW='your GPG password'
# The local server you are backing your files up to, including the path
TARGET='scp://username@your.local.host:22//your/backup/path/'
# Location of the files you want to back up
SOURCE='/Users/dgm'
# Refer to the duply documentation for these
MAX_AGE=6M
MAX_FULL_BACKUPS=1
VERBOSITY=5
TEMP_DIR=/tmp

Step 8

At this point you should be able to run the sync-fuse.sh script manually. To automate it, create a cron job on the server for your user.

# m h dom mon dow command
00 02 * * * /home/your_user/scripts/sync-fuse.sh >> /dev/null 2>&1
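If you prefer not to edit the crontab by hand, one option is to pipe the existing crontab through a small filter and load the result with crontab -. The helper name is hypothetical; the filter also keeps the entry from being added twice if you rerun it.

```bash
# Hypothetical helper: read a crontab on stdin and print it with LINE
# appended exactly once (an identical existing line is dropped first).
merge_cron_line() {
  local line="$1"
  grep -vxF -- "$line" || true   # grep exits 1 when nothing is left to print
  printf '%s\n' "$line"
}

# Usage:
#   entry='00 02 * * * /home/your_user/scripts/sync-fuse.sh >> /dev/null 2>&1'
#   { crontab -l 2>/dev/null || true; } | merge_cron_line "$entry" | crontab -
```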

I’ve set it to run in the middle of the night so it doesn’t consume bandwidth during the day when I might be working, Hulu’ing, or Netflix’ing.

Step 9

Celebrate! You have set up encrypted offsite backups.

Not Working?

Let us know. We’re always looking for feedback that will help us improve the quality of our posts.

Additional Resources

- Duply Homepage

- Duplicity Homepage

- Fuse Sync on Github

Jason February 15, 2011 at 11:21 pm

While your GPG key generation step is necessarily simple for example purposes, it’s important to generate a strong key for security. That means an RSA key of at least 2048 bits. GnuPG v2 makes this easy: choose option 1 (RSA and RSA) at the first prompt for --gen-key, and the default size is 2048 bits. However, systems using GnuPG v1.x, which is still the vast majority of Linux/Unix systems, will by default create a 1024-bit DSA/ElGamal keypair. You can create a 2048-bit ElGamal key, but that is actually outside the OpenPGP spec and isn’t interoperable with most things. To create a 2048-bit RSA key, you have to create it as “sign only” first, then go back into the key with gpg --edit-key and add an encryption subkey.
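Another way to request the 2048-bit RSA keypair this comment recommends is GnuPG’s unattended (batch) key generation, which reads a parameter file. The helper below is a hypothetical sketch that prints such a file; whether your GnuPG version accepts an RSA encryption subkey in batch mode is an assumption worth checking against its manual.

```bash
# Hypothetical helper: print a GnuPG unattended key-generation parameter
# file for a 2048-bit RSA signing key with a 2048-bit RSA encryption subkey.
gpg_key_params() {
  local name="$1" email="$2"
  # Expire-Date: 0 means the key never expires; adjust to taste.
  cat <<EOF
Key-Type: RSA
Key-Length: 2048
Key-Usage: sign
Subkey-Type: RSA
Subkey-Length: 2048
Subkey-Usage: encrypt
Name-Real: $name
Name-Email: $email
Expire-Date: 0
%commit
EOF
}
```

For example, gpg_key_params "Your Name" you@example.com > keyparams followed by gpg --batch --gen-key keyparams. The sketch leaves out a passphrase line because batch-mode passphrase handling differs between GnuPG versions.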